Avoid These 10 Frequent Mistakes in AI Development

Developing a robust AI system requires thorough preparation, continuous iteration, and ongoing monitoring. The process is prone to pitfalls that can lead to suboptimal results, wasted resources, and significant setbacks.

To help you build the best AI model possible, here are 10 common mistakes in AI development that you should avoid:

Table of contents:

1. Balancing Training: Too Much or Too Little

2. Training AI Models on Unrealistic Data

3. Failing to Address Biases

4. Ignoring Model Understandability

5. Neglecting Continuous Monitoring

6. Underfitting or Overfitting AI Models

7. Inadequate IT Infrastructure Investment

8. Addressing Bias in Data and Algorithms

9. Continuous Monitoring of AI Models

10. Selecting the Right AI Partner

1. Balancing Training: Too Much or Too Little

Overfitting happens when a model fits the training data too closely, noise included, and then performs poorly on test and real-world data. It is akin to rote memorization instead of applied learning: an overfitted model fails to generalize. Training for too long on the same dataset is a common cause. Regularization techniques like L1 (Lasso) and L2 (Ridge) add a penalty term to the loss function, which constrains the model coefficients and prevents them from becoming too large.

Conversely, insufficient training can cause underfitting, where the model is too simplistic to capture the underlying structure of the data. An underfitted model performs poorly on the training data and on real-world data alike.

To combat underfitting, consider using a more complex model, additional features, more data, or even synthetic data. Increasing the number of parameters in a neural network or the maximum depth in a decision tree can also help.
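To make the effect of an L2 penalty concrete, here is a minimal sketch for the simplest possible case: a one-feature linear model with no intercept. The function name `fit_slope`, the toy data, and the penalty value are assumptions chosen for illustration, not a recommended setup.

```python
def fit_slope(xs, ys, l2_penalty=0.0):
    """Fit y ~ w * x by minimizing sum((y - w*x)^2) + l2_penalty * w^2.

    Setting the derivative to zero gives the closed form
    w = sum(x*y) / (sum(x*x) + l2_penalty).
    """
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + l2_penalty)


# Toy data (made up for this example), roughly y = 2x with a little noise.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.1, 3.9, 6.2, 7.8]

w_plain = fit_slope(xs, ys)                   # no regularization
w_ridge = fit_slope(xs, ys, l2_penalty=10.0)  # L2 penalty shrinks the weight

print(round(w_plain, 4), round(w_ridge, 4))
```

The penalized slope comes out smaller than the unpenalized one, which is the "constraining" effect described above. In practice the penalty strength is usually tuned on validation data rather than fixed by hand.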

2. Training AI Models on Unrealistic Data:

Researchers often train and test models using clean, well-labeled datasets that do not generally reflect real-world data distributions. Consequently, models exhibit impressive "in-distribution" performance, where test data shares the same distribution as training data.

However, real-world data often differs from the training set; such data is called "out-of-distribution" data. It may be noisier, less clearly labeled, or include unfamiliar classes and features, leading to a significant drop in model performance upon deployment. How well a model holds up on such data is known as its "out-of-distribution" performance.

To address this, there's a growing emphasis on "robust AI," which focuses on developing models that maintain performance even with out-of-distribution data. Techniques like domain adaptation adjust model predictions to better align with new data distributions.
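Before a model can be adapted to a new distribution, the shift has to be noticed. The sketch below uses the Population Stability Index (PSI), a common drift score that the article itself does not mention, to compare one feature between training data and production data. The bin count, the epsilon used for empty bins, and the 0.25 rule-of-thumb threshold are assumptions for illustration.

```python
import math


def population_stability_index(expected, actual, bins=5):
    """Compare two samples of one numeric feature; 0 means identical histograms."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width if all values are equal

    def fractions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp values outside the training range
            counts[idx] += 1
        # A small floor keeps the logarithm defined for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = fractions(expected), fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


train_feature = [float(v) for v in range(10)]         # hypothetical training values
prod_feature = [float(v) + 5.0 for v in range(10)]    # same shape, shifted upward

psi_same = population_stability_index(train_feature, train_feature)
psi_shifted = population_stability_index(train_feature, prod_feature)

# Rule of thumb (an assumption, not a standard): PSI above 0.25 suggests a major shift.
print(psi_same, psi_shifted > 0.25)
```

A score like this only flags that the inputs have moved; deciding whether to retrain or apply domain adaptation still depends on how the model's accuracy responds.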

3. Failing to Address Biases:

Bias in AI models arises when algorithms make systematic errors or unfair decisions due to prejudices in the training data or model design. Because humans create AI models and the data they learn from, the models can inherit human biases.

Unchecked biases can cause the model to learn and perpetuate unfair patterns, systematically disadvantaging specific groups. To combat bias, it's crucial to establish guidelines, monitor and review the model, and share data selection and cleaning processes so that biases can be identified and addressed.

4. Ignoring Model Understandability:

It's easy to set up a model, run it, and hope it performs as expected. However, for AI to be trusted and adopted, its decisions must be transparent, understandable, and explainable, aligning with responsible AI and ethical standards. Neural networks are often termed black boxes due to their complex inner workings, making it difficult to understand why they produce specific outputs.

Scientists are developing techniques to make complex AI models, such as deep neural networks, more transparent and understandable. Methods such as attention mechanisms or saliency maps highlight the parts of the input that most influence the model's decisions, allowing users to see which aspects had the most impact.
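The idea behind a saliency map can be sketched without any deep learning library: nudge each input slightly and measure how much the output moves. The example below approximates this with finite differences; `toy_model` is a hypothetical stand-in for a real network, and real saliency methods typically use the model's actual gradients instead.

```python
def saliency(model, inputs, eps=1e-4):
    """Approximate |d output / d input_i| for each input via central differences."""
    scores = []
    for i in range(len(inputs)):
        up, down = list(inputs), list(inputs)
        up[i] += eps
        down[i] -= eps
        scores.append(abs(model(up) - model(down)) / (2 * eps))
    return scores


def toy_model(x):
    # Hypothetical model: depends strongly on x[0], weakly on x[1], not at all on x[2].
    return 3.0 * x[0] - 0.5 * x[1] + 0.0 * x[2]


scores = saliency(toy_model, [1.0, 2.0, 3.0])
print([round(s, 3) for s in scores])  # roughly [3.0, 0.5, 0.0]
```

The largest score points at the input the model is most sensitive to, which is the kind of signal a saliency map visualizes over pixels or tokens.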

Thorough documentation is the best way to maintain transparency and ensure ease of understanding for an AI model. This documentation should cover all aspects of the data used to train the model, including its sources, quality, and any preprocessing steps applied. Comprehensive documentation clarifies the model's foundation and the steps taken to refine the data. This level of detail contributes to a better understanding of the AI model and instills confidence in its decision-making process.

5. Neglecting Continuous Monitoring:

Data and its underlying patterns change over time, which can make models outdated or less accurate. Factors such as evolving consumer behaviors, emerging market trends, competitive shifts, policy changes, or global events like a pandemic can cause these changes, a phenomenon known as concept drift.

Monitoring model performance over time is crucial for companies that continuously predict product demand. While a model may initially provide accurate predictions, its accuracy can degrade as real-life data changes. To address this, companies must monitor the model continuously, comparing its outputs against actual demand and tracking performance metrics.
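As a rough illustration of tracking predictions against actual demand, the sketch below keeps a rolling window of absolute errors and raises a flag when the recent average drifts well above a baseline. The class name `DemandMonitor`, the window size, and the tolerance factor are assumptions for illustration.

```python
from collections import deque


class DemandMonitor:
    """Track rolling mean absolute error and flag likely degradation."""

    def __init__(self, baseline_mae, window=7, tolerance=1.5):
        self.baseline_mae = baseline_mae    # error level measured at deployment time
        self.tolerance = tolerance          # how much worse than baseline is acceptable
        self.errors = deque(maxlen=window)  # only the most recent errors are kept

    def record(self, predicted, actual):
        self.errors.append(abs(predicted - actual))

    def rolling_mae(self):
        return sum(self.errors) / len(self.errors) if self.errors else 0.0

    def needs_attention(self):
        # Wait for a full window so one bad day does not trigger an alert.
        full = len(self.errors) == self.errors.maxlen
        return full and self.rolling_mae() > self.tolerance * self.baseline_mae


monitor = DemandMonitor(baseline_mae=10.0, window=3)
for predicted, actual in [(100, 95), (100, 108), (100, 110)]:
    monitor.record(predicted, actual)
stable = monitor.needs_attention()

for predicted, actual in [(100, 160), (100, 150), (100, 140)]:
    monitor.record(predicted, actual)
drifted = monitor.needs_attention()

print(stable, drifted)  # False True
```

A flag like this is a prompt to investigate or retrain, not proof of concept drift on its own; a seasonal spike or a data pipeline fault can produce the same signal.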

Additionally, employing incremental learning techniques is essential. This approach allows the model to learn from new data while retaining valuable knowledge from previously observed data. By adopting these strategies, companies can effectively adapt to concept drift, ensuring accurate predictions for product demand without disregarding prior valuable information.
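One simple form of incremental learning is online gradient descent, where the model is nudged by each new observation instead of being retrained from scratch. The sketch below shows this for a small linear model; the class name `OnlineLinearModel`, the learning rate, and the synthetic data stream are assumptions for illustration.

```python
class OnlineLinearModel:
    """Linear model updated one observation at a time (squared-error SGD)."""

    def __init__(self, n_features, learning_rate=0.05):
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict(self, x):
        return self.bias + sum(w * xi for w, xi in zip(self.weights, x))

    def update(self, x, y):
        # Move the parameters a small step against the prediction error.
        error = self.predict(x) - y
        self.weights = [
            w - self.learning_rate * error * xi for w, xi in zip(self.weights, x)
        ]
        self.bias -= self.learning_rate * error


model = OnlineLinearModel(n_features=1)

# Hypothetical stream of observations following y = 2x + 1.
for _ in range(400):
    for x in [0.0, 0.5, 1.0, 1.5, 2.0]:
        model.update([x], 2.0 * x + 1.0)

print(round(model.predict([3.0]), 2))
```

Because each update is small, earlier observations still shape the current weights, which is the "retaining valuable knowledge" property described above. A smaller learning rate retains more of the past; a larger one adapts faster to drift.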

6. Underfitting or Overfitting AI Models:

Two prevalent errors businesses make when developing AI models are underfitting and overfitting. Underfitting occurs when the AI model is too simplistic to capture the patterns in the data, resulting in missed insights and opportunities. This happens when the model is not complex enough for the structure of the data it is given.

Overfitting happens when the model is overly complex and tailored too closely to its training data. This limits the model's ability to generalize to new datasets, producing unreliable results.

To avoid these extremes, businesses should establish clear parameters for AI model development and adhere to necessary regulations to ensure balance. Partnering with AI firms can help achieve this equilibrium.

7. Inadequate IT Infrastructure Investment:

AI systems are prone to malfunctions without the appropriate IT infrastructure. Relying on outdated legacy systems makes it hard to build the infrastructure that AI tools need to function in practice. This can lead to increased operational costs, slower results, and inaccurate insights or predictions.

Businesses should be prepared to overhaul their IT infrastructure, incorporating advanced technologies (both on-premises and cloud) to create an environment conducive to AI model success.

8. Addressing Bias in Data and Algorithms:

Bias is a significant concern in AI model training. AI algorithms learn from the datasets provided, and if these datasets are biased, the algorithms will reflect the same biases.

Organizations should recognize the existence of biases within datasets to effectively identify and address gender, religious, political, and racial biases. It is crucial to include diverse datasets that represent broader and marginalized communities. Regular testing of AI models for bias is essential to make necessary corrections during development.
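A basic bias test compares how often the model gives a positive outcome to each group. The sketch below computes per-group selection rates and the gap between them, a measure often called the demographic parity difference. The function names, the group labels, and the sample predictions are made up for illustration, and this is only one of several fairness measures.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Share of positive (1) predictions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        if pred == 1:
            positives[group] += 1
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(predictions, groups):
    """Difference between the highest and lowest group selection rate."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical model outputs for two groups, "a" and "b".
predictions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = selection_rates(predictions, groups)
gap = demographic_parity_gap(predictions, groups)
print(rates, gap)  # {'a': 0.75, 'b': 0.25} 0.5
```

A gap near zero means the groups receive positive outcomes at similar rates. What counts as an acceptable gap depends on the application, so the number is a starting point for review rather than a verdict.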

9. Continuous Monitoring of AI Models:

Ongoing monitoring and maintenance are critical for ensuring AI models function correctly. Resources should be allocated to keep monitoring AI systems after deployment, through continuous testing and validation.

This continuous process ensures AI models are retrained when new data becomes available or business KPIs change. Neglecting AI management and maintenance can lead to outdated insights that adversely affect business decisions.

10. Selecting the Right AI Partner:

AI adoption can fail if businesses do not select the right AI partner carefully. With numerous AI service providers available, it is essential to understand their offerings, domain expertise, knowledge, pricing, and project portfolio. Inadequate research before hiring a consulting company can lead to misaligned ideas and expectations.

Even if a service provider has a good reputation, they may not be the best fit for specific business needs. Reviewing service offerings and testimonials and considering long-term goals is crucial before finalizing an AI partner. Frequent changes in partners can lead to complications.

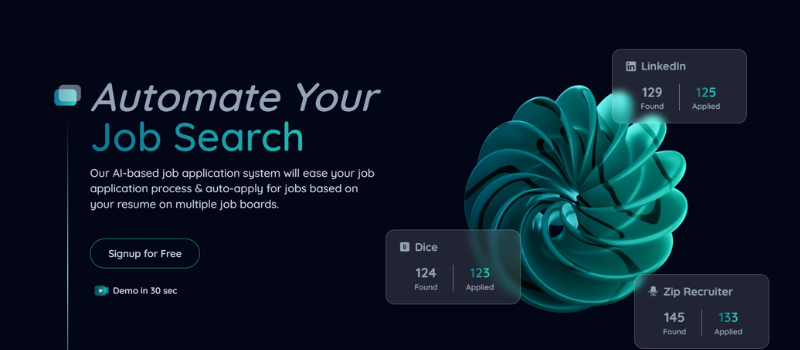

"A harmonious relationship between AI developers and researchers is the cornerstone of groundbreaking AI advancements. It’s a symbiotic partnership where mutual respect, open collaboration, and a shared vision are paramount. When these elements converge, AI teams are empowered to push the boundaries of innovation and create transformative solutions." — Dr. Priya Patel, Head of AI Research

Developing the Best AI Model:

Successfully navigating AI development is not straightforward. It requires careful consideration, dealing with uncertainties, and avoiding potential pitfalls. With attention to detail, a commitment to ethical practices, and robust methodologies, we can create AI solutions that are not only effective and efficient but also responsible and ethical.
